Managing Crawl Budget: A Guide

Managing crawl budget is crucial for website owners and SEO professionals alike. Crawl budget is the number of pages that search engine bots, like Googlebot, can and want to crawl on a website over a specific period.

Optimizing crawl budget is instrumental in enhancing a website’s visibility and performance in search engine results. Key factors influencing crawl budget include website size, site speed, and content quality.

By understanding these factors and applying strategies to make better use of crawl budget, website owners can help ensure that their content is crawled efficiently and considered for indexing, improving its chances of ranking higher in search engine results pages.

In this article, we discuss the key aspects of crawl budget every site owner needs to know, such as the two elements that determine it and the main factors that affect it. We also explain how it affects your site’s SEO results and share tips on how to make the most of it.

What Is a Crawl Budget?

Crawl budget is a crucial concept in SEO: it describes how much crawling search engine bots devote to a website within a specific timeframe. To understand it better, it helps to look at how search engine bots discover and index web pages.

Search engine bots continuously scour the web to discover new and updated content. They follow links from one page to another and collect the content they encounter so it can be considered for indexing. However, not all pages on a website receive the same level of attention from these bots.

Imagine a small, family-owned bookstore that has been passed down through generations, nestled in the heart of a bustling city. The store has a humble website with a few pages that showcase its history, staff, and best-selling books. This virtual representation of the shop doesn’t require much upkeep, and neither does its search engine presence.

Much like our fictional bookstore, websites with a limited number of pages that don’t change frequently can focus on maintaining an up-to-date sitemap and regularly monitoring their index coverage. There’s no need to constantly worry about crawl budget, which is determined by two elements: crawl capacity limit and crawl demand.

Crawl capacity limit is the maximum number of simultaneous connections Googlebot can use to crawl a site without overloading its server, while crawl demand reflects how much Google wants to crawl the site, based on factors such as how many URLs it knows about, how popular they are, and how often they change.

For our quaint bookstore, and other sites like it, maintaining a strong search engine presence takes only a few simple steps. Keep in mind that a crawled page is not automatically indexed: each crawled page is still evaluated, consolidated with any duplicates, and assessed for indexing. By making sure every page is worth indexing and easy to reach, even small sites can flourish in the vast landscape of the digital world.

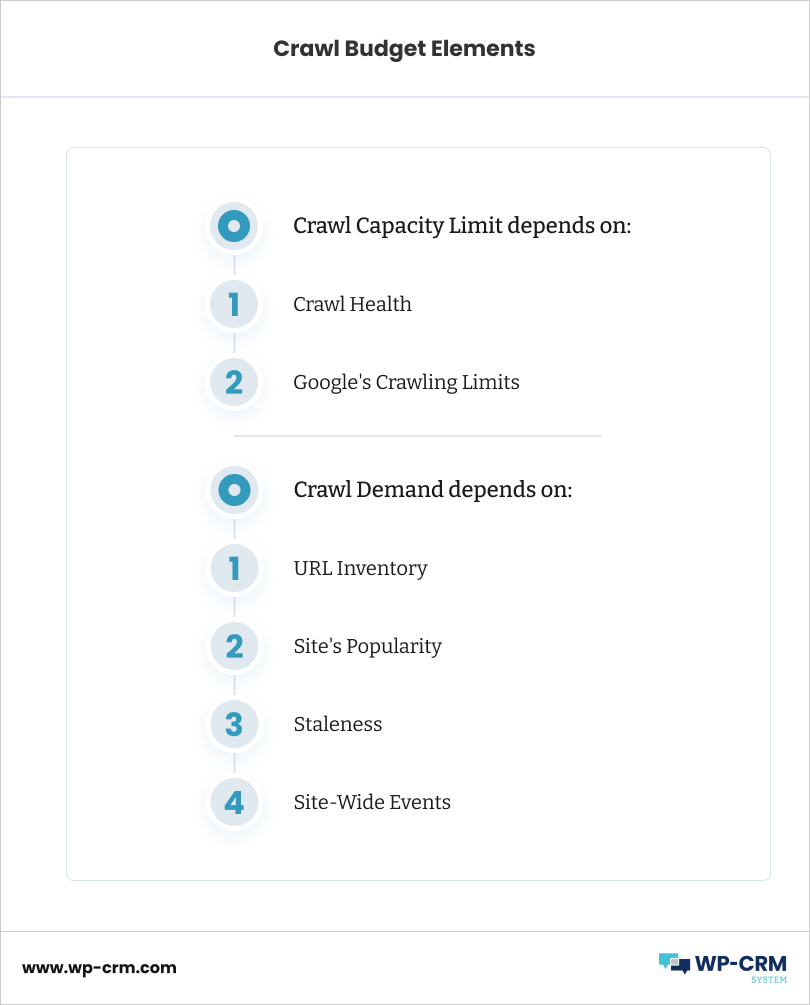

Crawl Budget Elements

Your website’s crawl budget is determined by two key elements, each with its own characteristics. Here they are:

Crawl Capacity Limit

The crawl capacity limit is the maximum number of simultaneous parallel connections Googlebot can use to crawl a site without overloading its servers, along with the time delay between fetches.

A website’s crawl capacity limit depends on its crawl health and Google’s own crawling limits.

For example, if your pages load quickly and the server returns no errors, crawl health improves, the limit goes up, and more pages can be crawled and considered for indexing. If the site slows down or returns server errors, the limit goes down. Google’s crawlers also have finite resources, which caps a site’s crawl capacity limit as well. In short, not every page on a website gets crawled, and not every crawled page gets indexed.
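The feedback loop described above can be pictured with a toy model. This is not Google’s algorithm: it is a hypothetical additive-increase, multiplicative-decrease rule in which a crawler adds one parallel connection after each healthy batch of fetches and halves its connections after a slow response or a 5xx error. The slowness threshold, step sizes, and cap are arbitrary assumptions.

```python
from dataclasses import dataclass


@dataclass
class CrawlCapacity:
    """Toy model of a crawl capacity limit (hypothetical, for illustration only)."""
    connections: int = 2        # current number of parallel connections
    min_connections: int = 1
    max_connections: int = 10   # stand-in for the crawler's own resource limits
    slow_ms: float = 1000.0     # assumed threshold for a "slow" response

    def observe(self, response_ms: float, status: int) -> int:
        """Adjust the limit after one batch of fetches and return the new value."""
        if status >= 500 or response_ms > self.slow_ms:
            # Server errors or slow responses: halve the limit to ease server load.
            self.connections = max(self.min_connections, self.connections // 2)
        else:
            # Fast, healthy responses: grow the limit by one connection.
            self.connections = min(self.max_connections, self.connections + 1)
        return self.connections


cap = CrawlCapacity()
for ms, status in [(200, 200), (180, 200), (150, 200), (2500, 200), (120, 503)]:
    print(f"{ms} ms, HTTP {status} -> {cap.observe(ms, status)} connections")
```

Running it shows the limit climbing from 2 to 5 over three fast batches, then dropping to 2 after the slow response and to 1 after the 503 error.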

Crawl Demand

Google always strives to surface the most relevant, engaging, and high-quality content for a search query and satisfy the user. Think of a website as a public library: its size, how often new titles arrive, and how popular its materials are determine how much time a “reader”, a.k.a. Googlebot, will spend exploring the shelves.

Crawlers dedicate as much time as necessary to crawl a site based on factors like its size, how often it’s updated, the quality of its content, and its relevance compared to other sites. These factors contribute to what’s known as crawl demand.

To illustrate, let’s consider the following elements that contribute to crawl demand:

- Perceived Inventory. Without specific instructions, Googlebot will attempt to crawl most URLs on a site. To avoid wasting Google’s crawling time on unnecessary or duplicate content, you can streamline your website structure. In our library analogy, this would involve maintaining an up-to-date catalog and ensuring duplicate copies of books aren’t mistakenly categorized as unique titles.

- Popularity. URLs with higher online popularity are crawled more often to keep their content fresh in search engine indexes. Think of popular bestsellers—they would be checked more frequently to ensure their availability and relevancy for patrons.

- Staleness. Google wants to recrawl documents frequently enough to capture any changes. In the context of our library, this would be akin to updating the catalog to reflect the latest editions of books or replacing worn-out copies with fresh prints.

- Site-Wide Events. Major website events, such as site moves, may increase crawl demand as Googlebot works to reindex content under new URLs. In our library scenario, this could be compared to reorganizing sections or updating the catalog when the layout changes.
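A quick way to see how perceived inventory inflates is to normalize a list of crawled URLs and count how many distinct pages remain. The sketch below assumes that utm_* tracking parameters, gclid, and sessionid never change page content, and that /books and /books/ serve the same page; both assumptions are hypothetical and should come from your own site’s URL conventions.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed NOT to change page content on this site.
IGNORED_PARAMS = {"gclid", "sessionid"}


def normalize(url: str) -> str:
    """Return a normalized form of `url` for counting duplicate URLs."""
    parts = urlsplit(url)
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS and not key.startswith("utm_")
    ]
    query.sort()  # parameter order does not change the page
    # Assumption: a trailing slash does not change the page on this site.
    path = parts.path.rstrip("/") or "/"
    # Lowercase scheme and host; drop the fragment, which never reaches the server.
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), ""))


crawled = [
    "https://Example.com/books/",
    "https://example.com/books?utm_source=newsletter",
    "https://example.com/books?page=2&sort=title",
    "https://example.com/books?sort=title&page=2&sessionid=abc",
]
unique = {normalize(u) for u in crawled}
print(len(crawled), "URLs ->", len(unique), "pages")
```

Here four crawled URLs collapse to two pages, which is exactly the kind of gap between raw and real inventory that eats into crawl time.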

By understanding and managing these factors, website owners can influence their site’s crawl demand and help Googlebot spend its crawling time on the content that matters.

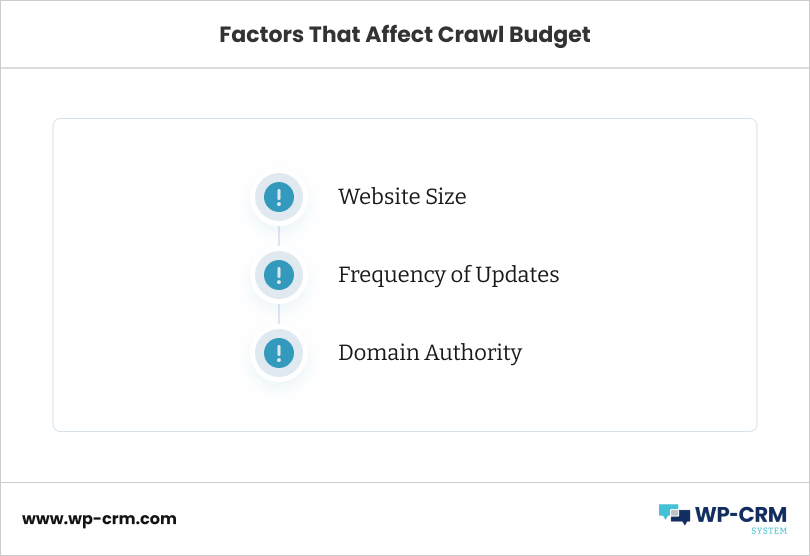

Factors That Affect Crawl Budget

Several factors influence crawl budget allocation:

Website Size

Larger websites with numerous pages may require more resources for crawling and indexing compared to smaller ones. The size of a website impacts how search engine bots prioritize which pages to crawl and how frequently.

Frequency of Updates

Websites that frequently update their content signal freshness to search engines. Consequently, search engine bots may allocate more crawl budget to such websites to ensure timely indexing of new content.

Domain Authority

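Websites that are widely linked and trusted in their niche, often described as having high “domain authority”, may receive a larger share of crawling, because URLs that are more popular on the web are recrawled more often to keep them fresh in the index. Note that Domain Authority as a specific score is a third-party metric created by Moz, not a signal Google uses. Search engine bots prioritize crawling pages from popular, credible sites to provide users with high-quality search results.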

Crawl Budget for SEO: Here’s Why It Matters

Crawl budget optimization is pivotal for a website’s SEO performance as it directly influences how search engines discover, crawl, and index web pages. Understanding its significance can lead to tangible improvements in a website’s visibility and ranking in search engine results.

Efficient crawl budget allocation enables search engines to discover and index new content more rapidly. When search engine bots can crawl and index a website’s pages efficiently, it ensures that fresh and relevant content is promptly included in search engine results. This timely indexing enhances the website’s visibility, making it more likely to rank higher for relevant search queries.

Conversely, a poorly managed crawl budget can have adverse effects on a website’s SEO. Important pages may be overlooked, resulting in decreased visibility and potential loss of organic traffic. Moreover, outdated or irrelevant content may continue to be indexed, diluting the website’s relevance and authority to search engine algorithms.

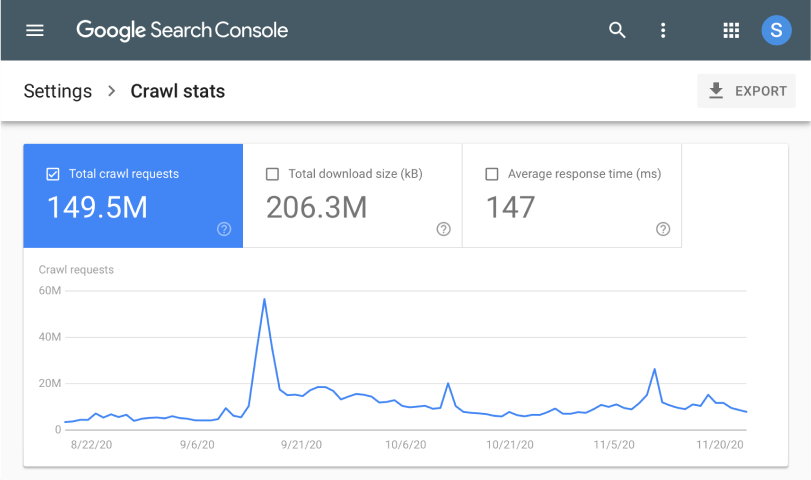

You can monitor your website’s crawl activity, and spot the performance issues that affect it, in the Crawl Stats report in Google Search Console.

To find and use it, follow these steps:

- Log in to your Google Search Console account.

- Navigate to the Crawl Stats Report. Once logged in, click “Settings” in the left-hand menu, then click “Open Report” next to “Crawl stats” in the “Crawling” section.

- Review the Overview. Assess the overview section of the Crawl Stats report. This section provides a summary of Googlebot’s activity on your site, like total number of requests, total download size, and average response time.

- Analyze Crawl Stats Trends. Look for any noticeable patterns. Pay attention to changes in crawl activity over time, such as increases or decreases in the number of requests or download size.

- Examine Crawl Requests. Check the total crawl requests chart for sudden spikes or drops, as they may indicate changes in Googlebot’s behavior or your site’s performance.

- Evaluate Response Time. Review the average response time chart to see how quickly your server answers Googlebot’s requests. Rising response times can lower your crawl capacity limit, so investigate any sustained increase.

- Check Host Status. Review the host status section, which reports problems with robots.txt fetching, DNS resolution, and server connectivity that may affect Googlebot’s ability to crawl your site.

- Explore Data by File Type. Use the “By file type” breakdown to view crawl requests grouped by file type (e.g., HTML, CSS, JavaScript, images). Analyze how Googlebot interacts with each file type and identify any potential issues or optimizations.

- Review Data by Response Code. Use the “By response” breakdown to examine crawl requests grouped by HTTP response code (e.g., 200, 404, 500). Identify any pages returning error codes (e.g., 404 Not Found) and take corrective action.
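Your own server access logs complement the aggregated view in the report, because they record every individual request. As a rough cross-check, the sketch below counts requests whose user agent mentions Googlebot, grouped by HTTP status code, from lines in the common “combined” log format used by Apache and Nginx. The regular expression and sample lines are assumptions; user-agent strings can be spoofed, so confirm genuine Googlebot traffic (for example with a reverse DNS lookup) before acting on the numbers.

```python
import re
from collections import Counter

# Combined Log Format: host ident user [time] "request" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[[^\]]+\] '
    r'"\S+ (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_counts(lines):
    """Count requests per HTTP status where the user agent claims to be Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("status")] += 1
    return counts


sample = [
    '66.249.66.1 - - [10/May/2024:10:00:00 +0000] "GET /books HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/May/2024:10:00:05 +0000] "GET /old-page HTTP/1.1" 404 312 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/May/2024:10:00:06 +0000] "GET /books HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
]
print(googlebot_status_counts(sample))
```

A growing share of 404 or 5xx responses here is a sign that Googlebot is spending requests on URLs that return nothing useful.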

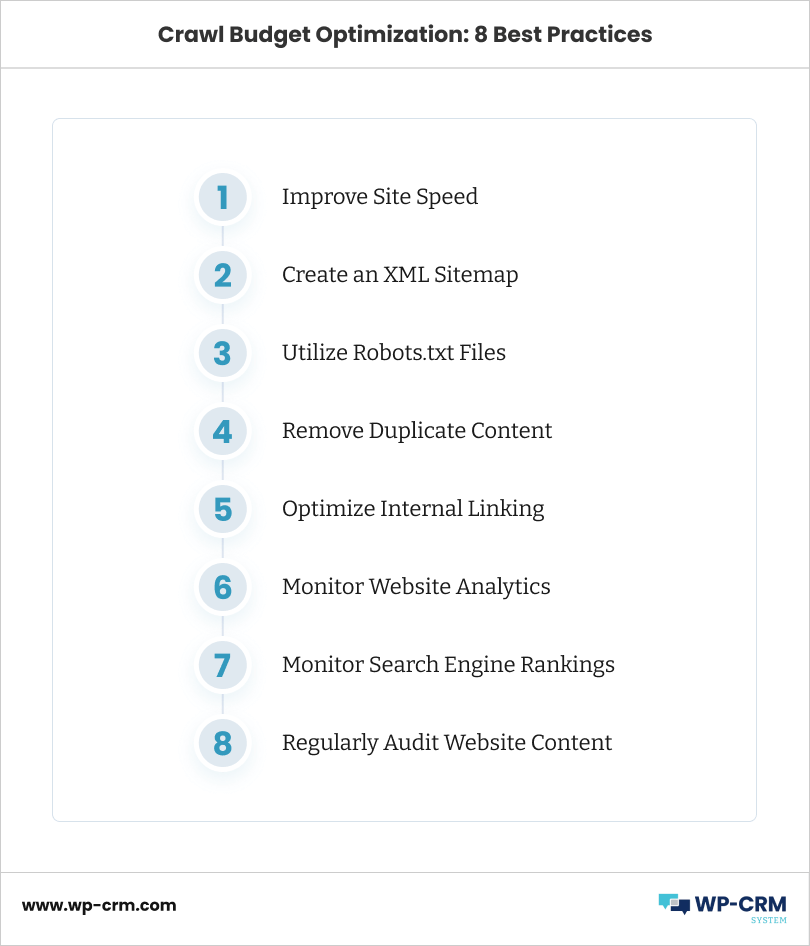

Crawl Budget Optimization: 8 Best Practices

Optimizing crawl budget is essential for ensuring that search engine bots efficiently crawl and index a website’s content. By implementing best practices for crawl budget optimization, website owners can improve their website’s visibility, search engine rankings, and overall SEO performance. Here are several practical tips and strategies for optimizing crawl budget:

1. Improve Site Speed

Site speed plays a crucial role in crawl budget optimization. A fast, error-free server raises the crawl capacity limit, so faster websites can be crawled more often by search engine bots. To improve site speed, optimize images, minify CSS and JavaScript files, enable browser caching, and use content delivery networks (CDNs). Run regular speed tests using tools like Google PageSpeed Insights or GTmetrix to identify and address performance issues.

2. Create an XML Sitemap

An XML sitemap serves as a roadmap for search engine bots, guiding them to important pages on the website. Creating an XML sitemap and submitting it to search engines helps ensure that relevant pages are discovered and crawled efficiently, although it does not guarantee that every listed URL will be crawled or indexed. Include important URLs, such as main pages, product pages, and blog posts. Keep the sitemap up to date as content changes, including accurate last-modified dates so crawlers can tell which pages have changed.
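For sites without a CMS plugin, a sitemap can be generated with Python’s standard library. This minimal sketch follows the sitemaps.org protocol (urlset, url, loc, and the optional lastmod element); the page list and dates are placeholders that would normally come from your CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & as &amp;
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. 2024-05-01
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/books?id=1&ed=2", None),
])
print(sitemap_xml)
```

A single sitemap file is limited to 50,000 URLs or 50 MB uncompressed; larger sites split their URLs across several sitemaps listed in a sitemap index file.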

3. Utilize Robots.txt Files

Robots.txt files let website owners control which URLs search engine bots may crawl. Use Disallow rules to keep crawlers out of low-value sections, such as admin directories or parameter-generated duplicate URLs, so that crawl budget goes to more important pages. Note that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results (without its content) if other pages link to it, so use a noindex directive on a crawlable page when the goal is to keep it out of the index. Regularly review and update the robots.txt file to ensure it accurately reflects the website’s crawling preferences.
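You can sanity-check simple robots.txt rules before deploying them with Python’s built-in urllib.robotparser module. The rules and URLs below are hypothetical. Be aware that this parser only does plain prefix matching, does not implement Google’s * and $ wildcards, and applies the first matching rule rather than Google’s most specific (longest) match, so rules that rely on those features should be tested with Google’s own tools instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for the bookstore site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart
Disallow: /search

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ["/books/rare-editions", "/admin/login", "/search?q=tolkien", "/cart"]:
    url = "https://example.com" + path
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(path, verdict)
```

Because rules match by prefix, Disallow: /cart would also block a URL such as /cartography; write Disallow: /cart/ if only the directory is meant.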

4. Remove Duplicate Content

Duplicate content wastes crawl budget because bots spend requests fetching several URLs that serve the same page, and it can dilute ranking signals across those URLs. Identify and address duplicate content issues by implementing canonical tags or 301 redirects, and consolidate similar or redundant pages, so that search engine bots prioritize crawling and indexing original, high-quality content. The rel="nofollow" link attribute is not a duplicate-content fix: Google treats it as a hint, and the linked URL may still be crawled if it is discovered elsewhere.
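To find exact duplicates among pages you have already fetched, one simple approach is to hash each page’s main text after normalizing whitespace and case, then group URLs that share a hash; each group is a candidate for a 301 redirect or a canonical tag. This sketch only catches exact duplicates after normalization (near-duplicates need fuzzier techniques such as shingling, which it does not attempt), and the page texts are made up.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """SHA-256 of the text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def duplicate_groups(pages: dict) -> list:
    """Return sorted lists of URLs whose fingerprints match (groups of 2 or more)."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "https://example.com/books/dune": "Dune by Frank Herbert.  In stock.",
    "https://example.com/books/dune?ref=home": "Dune by Frank Herbert. In stock.",
    "https://example.com/books/emma": "Emma by Jane Austen. In stock.",
}
print(duplicate_groups(pages))
```

The two Dune URLs land in one group, suggesting the ?ref=home variant should point to the clean URL with a canonical tag or redirect.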

5. Optimize Internal Linking

Internal linking plays a crucial role in crawl budget optimization by guiding search engine bots to important pages within the website. Use descriptive anchor text and logical site structure to facilitate efficient crawling and indexing of content. Prioritize internal links to high-value pages, ensuring that they are easily accessible and prominent within the website’s navigation and content.
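One way to check whether important pages are easy to reach is to compute click depth: the minimum number of internal links from the homepage to each page, found with a breadth-first search over the site’s internal link graph. The graph below is a hypothetical, hand-written example; in practice you would build it from a crawl of your own site. Deep pages, and pages that cannot be reached at all (orphans), are candidates for stronger internal linking.

```python
from collections import deque


def click_depths(links: dict, start: str) -> dict:
    """Breadth-first search: minimum number of clicks from `start` to each reachable page."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/books", "/about"],
    "/books": ["/books/bestsellers", "/books?page=2"],
    "/books?page=2": ["/books/rare-editions"],
    "/about": ["/about/staff"],
}
# Every known page, plus a hypothetical page listed in the sitemap but never linked.
all_pages = set(links) | {t for targets in links.values() for t in targets} | {"/events"}

depths = click_depths(links, "/")
orphans = all_pages - set(depths)
for page, d in sorted(depths.items(), key=lambda kv: (kv[1], kv[0])):
    print(d, page)
print("orphans:", sorted(orphans))
```

In this example the rare-editions page sits three clicks deep behind a paginated listing, and /events is an orphan, so both would benefit from a link in the main navigation or from a high-traffic page.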

6. Monitor Website Analytics

Regularly monitor website analytics to assess the impact of crawl budget optimization efforts on search engine visibility and performance. Track metrics such as crawl requests (from the Crawl Stats report), indexed pages (from the Page indexing report in Search Console), and organic traffic to evaluate the effectiveness of optimization strategies. Analyze trends and identify areas for improvement based on the data.

7. Monitor Search Engine Rankings

Monitor search engine rankings to gauge the impact of crawl budget optimization on the website’s visibility in search engine results pages (SERPs). Track keyword rankings, click-through rates (CTRs), and organic traffic to measure the effectiveness of optimization efforts. Adjust strategies based on changes in search engine rankings and performance metrics.

8. Regularly Audit Website Content

Conduct regular content audits to identify and remove outdated, low-quality, or irrelevant content from the website. By pruning unnecessary pages, website owners can streamline their website’s content and focus crawl budget on high-value, relevant content. Use tools like Google Analytics and Google Search Console to identify underperforming pages and prioritize content optimization efforts.
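A content audit often starts from an export of page-level metrics. The sketch below flags pages for review when they had no organic clicks in the reporting window and have not been updated in over a year. Both thresholds, the field names, and the records are hypothetical, and a flagged page still needs human judgment before it is improved, merged, redirected, or removed.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class PageStats:
    url: str
    clicks_90d: int      # hypothetical field: organic clicks, e.g. from a Search Console export
    last_updated: date


def flag_for_review(pages, today: date, max_age_days: int = 365):
    """Return URLs with zero clicks whose content is older than max_age_days."""
    return [
        p.url
        for p in pages
        if p.clicks_90d == 0 and (today - p.last_updated).days > max_age_days
    ]


pages = [
    PageStats("/blog/store-reopening-2019", 0, date(2019, 3, 1)),
    PageStats("/books/bestsellers", 420, date(2024, 4, 28)),
    PageStats("/blog/new-arrivals", 0, date(2024, 4, 1)),
]
print(flag_for_review(pages, today=date(2024, 5, 1)))
```

Recent pages with zero clicks, like /blog/new-arrivals here, are deliberately left out, since they may simply not have had time to attract traffic.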

What About the Crawl Budget for Big Sites?

Managing crawl budgets on large websites presents unique challenges due to the sheer volume of pages that need to be crawled and indexed regularly. With thousands or even millions of pages, website owners must employ strategic approaches to ensure efficient crawl budget allocation.

One challenge for large websites is ensuring that all content is crawled and indexed regularly. To address this, website owners can prioritize their content based on importance and relevance. High-value pages, such as product pages or cornerstone content, should receive priority for crawling and indexing, while less critical pages may be crawled less frequently.

Pagination is another technique that can help on large websites. Splitting long listings, such as category pages, into multiple pages keeps each page a manageable size, and plain, crawlable HTML links between the pages let search engine bots reach every item in the sequence. Google stopped using rel="next" and rel="prev" as an indexing signal in 2019, so rely on those sequential links rather than on the tags, although other search engines may still read them.

Faceted navigation, commonly used in e-commerce websites, allows users to filter and navigate through large datasets. However, it can create a huge number of URL variations, each of which competes for crawl budget. Website owners can address this by blocking crawling of low-value facet combinations, for example with robots.txt rules for the filter parameters, and by using canonical tags to consolidate similar or duplicate pages. Canonical tags alone do not prevent crawling, since Google has to fetch a page to see its canonical tag.
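The arithmetic behind that URL explosion is easy to check. If a category page has facets with n1, n2, … options and each facet can be left unset or set to exactly one value, the number of distinct filter combinations is the product of (n + 1) over all facets. If users can select several options per facet, every option becomes independently on or off, giving 2 raised to the total number of options. The facet names and sizes below are made up.

```python
from math import prod


def single_select_combinations(option_counts):
    """Each facet is either unset or set to exactly one of its options."""
    return prod(n + 1 for n in option_counts)


def multi_select_combinations(option_counts):
    """Each option of each facet can be independently switched on or off."""
    return 2 ** sum(option_counts)


# Hypothetical facets for one category page: facet name -> number of options.
facets = {"genre": 12, "format": 4, "price_band": 5, "language": 6}
print(single_select_combinations(facets.values()))
print(multi_select_combinations(facets.values()))
```

Single-select filters already give 13 × 5 × 6 × 7 = 2,730 URLs for this one category page, and multi-select pushes it past 134 million, before sort orders, pagination, or parameter reordering multiply the count further.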

Dynamic rendering is another technique sometimes used on large websites. By serving pre-rendered HTML snapshots to search engine bots, website owners can help ensure that content generated dynamically through JavaScript is crawled and indexed, and it can reduce the rendering work crawlers have to do. Note, however, that Google now describes dynamic rendering as a workaround rather than a long-term solution, and recommends server-side rendering, static rendering, or hydration instead.

Final Words

Understanding and optimizing crawl budget is vital for website performance and SEO success. Evaluate your website’s crawl budget and implement the best practices discussed, such as improving site speed and utilizing XML sitemaps. Remember, crawl budget management is an ongoing process. Continuously monitor and adjust strategies to achieve optimal results. By prioritizing crawl budget optimization, you can enhance your website’s visibility, search engine rankings, and overall SEO performance.